Coaching the Machine: How GPT Pods Are Reimagining Delivery

What happens when a delivery lead, a youth hockey coach, and a team of GPTs build an app—and rethink how we deliver in the AI era?

I. From Copy-Paste Fatigue to Delivery Insight

It started, as many tech adventures do, with excitement — generating and stitching thousands of lines of code with ChatGPT and GitHub Copilot. But switching between tabs and trying to keep context alive across sessions wasn't exciting — it was exhausting. It felt like bouncing between customer service reps in a call centre. Frustrating. Repetitive. Inefficient.

That’s when it hit me: I was trying to be a developer (and not a good one!). I'm used to leading 80+ person teams delivering complex enterprise systems — orchestrating architects, developers, testers, and analysts. So naturally, the question arose: what does delivery look like when some of your team are AI agents?

II. The Thunder Train

This insight collided with another world I live in — coaching my son’s rep hockey team. Tryouts just wrapped up. We had to say goodbye to some players and welcome new ones. It’s always tough.

But in our first team skate, we talked about being “one team”: we’re not just a collection of talented individuals — we’re building the Thunder Train. Each player is a car, strong on their own, but powerful only when connected and moving in sync.

That’s what (good) delivery looks like. And that’s what I wanted from AI.

III. Designing a Framework for AI-Native Delivery

So I built one: the AI Delivery Framework. A way to turn that Thunder Train vision into something real — a system where AI agents (called GPT Pods) collaborate with human leaders to deliver real-world outcomes. Apps, yes. But potentially anything.

Here’s how it works:

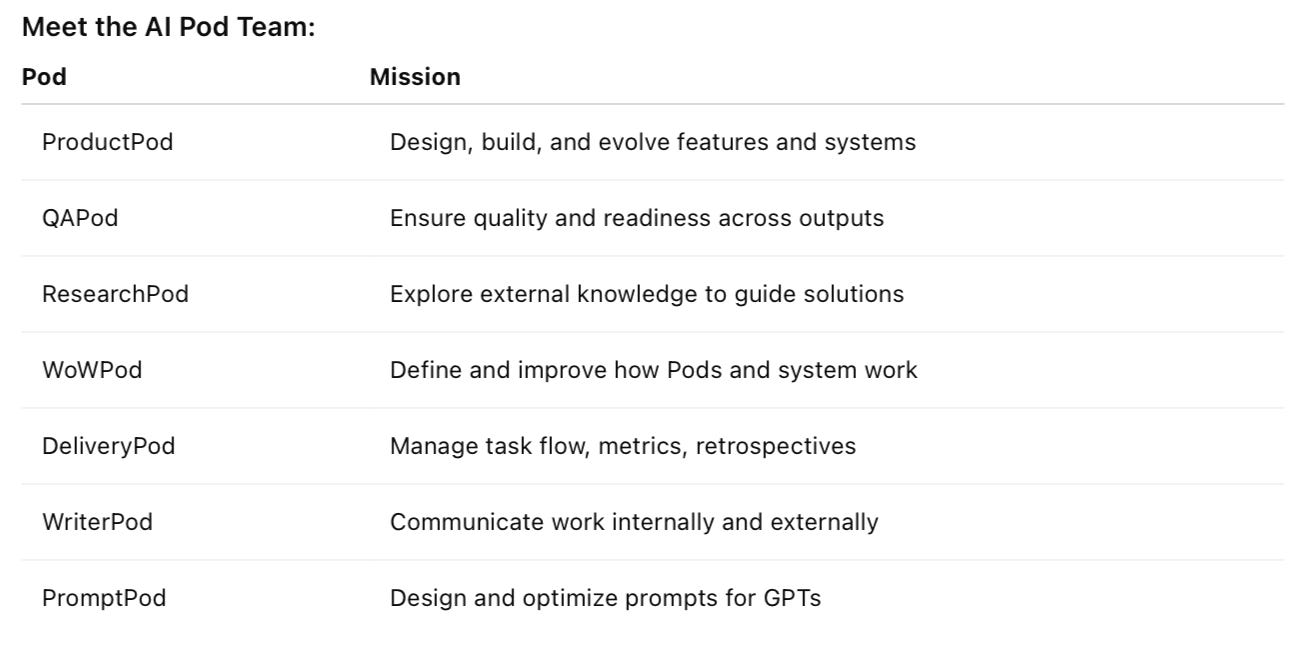

Pods: Each GPT-powered Pod is like a ChatGPT with a specialty — mirroring roles you’d find on a real delivery team: ProductPod, QAPod, ResearchPod, and more. Each one is tuned for a purpose, equipped with tools, and designed to work both independently and as part of a cohesive unit.

Memory: Every file, decision, and prompt is tracked in a shared memory (memory.yaml), stored in GitHub. It’s how GPT Pods “remember” across sessions and across teammates. Motto: "If it’s not in Git, it never happened."

🧠 Example memory.yaml entry: indexing every file in Git to make it searchable and reusable across the team

    - path: project/docs/architecture/custom_gpt_instructions.md
      raw_url: https://raw.githubusercontent.com/stewmckendry/ai-delivery-sandbox/sandbox-silent-otter/project/docs/architecture/custom_gpt_instructions.md
      file_type: md
      description: Documentation for configuring and instructing CareerCoach-GPT, a playful journaling assistant for kids exploring career options.
      tags:
        - gpt
        - prompt
        - config
        - flow
      last_updated: '2025-05-06'
      pod_owner: DeliveryPod

Tools: GPTs interact with real systems using APIs: reading, writing, and collaborating via structured tool calls.
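To make the memory index concrete, here is a minimal sketch of the kind of lookup a Pod's tool call might perform: filtering entries by tag. The field names mirror the example entry; the `MemoryEntry` class, the `find_by_tags` helper, and the second entry are assumptions for illustration, not the framework's actual code.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryEntry:
    """One indexed file, using the same fields as the memory.yaml example."""
    path: str
    description: str
    file_type: str
    pod_owner: str
    tags: list = field(default_factory=list)


def find_by_tags(entries, wanted):
    """Return entries that carry every tag in `wanted` (hypothetical helper)."""
    return [e for e in entries if set(wanted) <= set(e.tags)]


memory = [
    MemoryEntry(
        path="project/docs/architecture/custom_gpt_instructions.md",
        description="Instructions for configuring CareerCoach-GPT.",
        file_type="md",
        pod_owner="DeliveryPod",
        tags=["gpt", "prompt", "config", "flow"],
    ),
    # Illustrative entry only: the tags and owner here are assumed.
    MemoryEntry(
        path="project/inputs/prompts/prompts.json",
        description="Validated prompts for the app.",
        file_type="json",
        pod_owner="PromptPod",
        tags=["prompt"],
    ),
]

hits = find_by_tags(memory, {"prompt", "config"})
print([e.path for e in hits])
```

Requiring every requested tag keeps results narrow; a Pod that wants a broader search could pass a single tag instead.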

Reasoning: Every decision made by a GPT Pod is logged in its chain_of_thought.yaml and summarized in reasoning_trace.yaml, so you can trace how the team (human + GPT Pods) arrived at conclusions, and improve over time.
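As a rough sketch of that logging step, the snippet below appends a thought with a message, timestamp, and tags, then rolls the log up into a small summary. The `log_thought` and `summarize_trace` helpers and the summary shape are assumptions; the real format of reasoning_trace.yaml isn't shown in this post.

```python
from datetime import datetime


def log_thought(log, message, tags, now=None):
    """Append one chain-of-thought entry to `log` and return it."""
    entry = {
        "message": message,
        # ISO 8601 timestamp, e.g. '2025-05-03T01:13:21.668618'
        "timestamp": (now or datetime.now()).isoformat(),
        "tags": list(tags),
    }
    log.append(entry)
    return entry


def summarize_trace(log):
    """Assumed summary shape: a thought count plus the distinct tags used."""
    all_tags = sorted({tag for entry in log for tag in entry["tags"]})
    return {"thought_count": len(log), "tags": all_tags}


chain_of_thought = []
entry = log_thought(
    chain_of_thought,
    "Set a game-like target of 50 careers, popular today or emerging.",
    ["gamified-target", "future-careers", "expansion"],
    now=datetime(2025, 5, 3, 1, 13, 21, 668618),
)
trace = summarize_trace(chain_of_thought)
print(entry["timestamp"], trace)
```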

Example chain of thought from ResearchPod

    - message: 'Human Lead suggested setting a game-like target: 50 careers that are either popular today or emerging for tomorrow. This provides a fun milestone and a mix of present relevance and future-readiness. Plan: audit existing list, identify 15+ gaps using job outlook sources (e.g., LinkedIn, US BLS, WEF future jobs report), and expand YAML + markdown entries accordingly.'
      timestamp: '2025-05-03T01:13:21.668618'
      tags:
        - gamified-target
        - future-careers
        - expansion

Tasks: We gave the team a playbook, and that playbook was for shipping apps. The steps were structured. The tasks were YAML-based. And the tools spanned the entire task lifecycle.

Example task.yaml snippet:

    task_id: 2.2_build_feature
    phase: development
    pod_owner: DevPod
    inputs: ["feature_spec.md"]
    outputs: ["src/api.py"]
    status: in_progress
    depends_on: ["2.1_design"]
    handoff_from: 2.1_design

Orchestration: Pods pass work between each other using handoff notes, dependencies, and reasoning trails. Handoffs happen via handoff_notes.yaml, with dependencies tracked in task.yaml. Pods pick up where others left off.
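The dependency tracking can be sketched as a simple readiness check: a task is ready to pick up once everything in its depends_on list is finished. This is a sketch under assumptions. The `ready_tasks` helper, the `done` and `not_started` status values, and the `2.3_test_feature` task are illustrative; only `in_progress` appears in the example task.

```python
def ready_tasks(tasks):
    """Return IDs of unstarted tasks whose dependencies are all done."""
    done = {t["task_id"] for t in tasks if t["status"] == "done"}
    return [
        t["task_id"]
        for t in tasks
        if t["status"] == "not_started"
        and all(dep in done for dep in t.get("depends_on", []))
    ]


tasks = [
    {"task_id": "2.1_design", "status": "done", "depends_on": []},
    {"task_id": "2.2_build_feature", "status": "not_started",
     "depends_on": ["2.1_design"]},
    # Hypothetical follow-on task, blocked until the build is done.
    {"task_id": "2.3_test_feature", "status": "not_started",
     "depends_on": ["2.2_build_feature"]},
]

print(ready_tasks(tasks))  # ['2.2_build_feature']
```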

Example handoff note:

    - from_pod: PromptPod
      to_pod: ProductPod
      reason: Prompts designed and validated. Ready for UI integration.
      reference_files: ["project/inputs/prompts/prompts.json"]
      next_prompt: Integrate prompts into the UI
      notes: Youth-appropriate, follows defined schema
      ways_of_working: Focus on prompt loading and flow testing

Infrastructure: Each user works in a sandboxed GitHub branch: safe, trackable, and rollback-able. You get a unique branch, a reusable token, and everything you do is versioned and reversible. Think: personal AI workspace.
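The raw_url in the memory.yaml example hints at how the sandbox branch shows up in practice: the branch name is part of every file's URL. A small sketch of building that URL from its parts follows. The `raw_url` helper is hypothetical; the URL layout is GitHub's standard raw-content format.

```python
from urllib.parse import quote


def raw_url(owner, repo, branch, path):
    """Build a raw.githubusercontent.com URL for a file on a given branch."""
    # quote() keeps "/" intact by default, so nested paths stay readable.
    return (
        "https://raw.githubusercontent.com/"
        f"{owner}/{repo}/{quote(branch)}/{quote(path)}"
    )


url = raw_url(
    "stewmckendry",
    "ai-delivery-sandbox",
    "sandbox-silent-otter",
    "project/docs/architecture/custom_gpt_instructions.md",
)
print(url)
```

Because the branch is just one segment of the URL, pointing a Pod at a different sandbox only means swapping that one value.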

Together, these form a digital workshop where delivery is traceable, modular, and collaborative.

IV. Taking It for a Test Drive

To prove it works, I built an AI Career Coach for Youth — an app that helps kids explore future careers through conversation and journaling.

It started with ProductPod — my first AI partner. We kicked off with a Ways of Working agreement.

Then we entered Discovery mode:

We moved into build:

At every phase, we held a retrospective to learn how we (human + GPT Pods) could improve.

We didn't stop at what a ChatGPT could do on its own. We added:

Guardrails for kids to use the app, including tailored prompts and rules

Deep research with external knowledge sources on career paths

Integration with downstream systems for richer experience — Notion for journaling, and Airtable for reporting & analytics

The result: 1 Human (me) + 5+ GPT Pods = a working AI app for kids, wired into 3 systems, with 30+ artifacts in GitHub — all built in a day, without using an IDE. It made the delivery process feel empowering for someone non-technical.

This was a test drive — but imagine the same approach applied to an ERP, CRM, or any business application. Experience and tech: reimagined.

V. Under the Hood: What Powers the Framework

Underneath the AI Delivery Framework:

Custom GPTs tailored per Pod

OpenAPI Schema for every action

FastAPI server on Railway

GitHub for state + memory

Personal Access Token (PAT) auth

Every action, prompt, and decision is logged and traceable.
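For a feel of the GitHub + PAT piece, here is a minimal sketch that builds (but does not send) an authenticated request for a file on a sandbox branch, using GitHub's REST contents endpoint. Whether the framework's server uses this exact endpoint is an assumption, and `build_contents_request` and the placeholder token are illustrative names.

```python
from urllib.parse import quote
from urllib.request import Request


def build_contents_request(owner, repo, path, branch, token):
    """Prepare a GET request for a file via GitHub's contents API."""
    url = (
        f"https://api.github.com/repos/{owner}/{repo}/contents/"
        f"{quote(path)}?ref={quote(branch, safe='')}"
    )
    headers = {
        # The Personal Access Token travels in the Authorization header.
        "Authorization": f"Bearer {token}",
        "Accept": "application/vnd.github+json",
    }
    return Request(url, headers=headers)


req = build_contents_request(
    "stewmckendry",
    "ai-delivery-sandbox",
    "project/docs/architecture/custom_gpt_instructions.md",
    "sandbox-silent-otter",
    token="YOUR_PAT_HERE",  # placeholder; load a real token from the environment
)
print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would actually send it; keeping the build step separate makes it easy to log every call before it goes out.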

The technology is evolving fast. This is just one way to build an agent — a custom, DIY, mostly free way. The stack will get simpler. The real challenge is how we use AI responsibly with humans in the loop.

VI. From Delivery to Reinvention

App delivery is only the beginning. This framework could reimagine how we:

Apply for a visa

File a claim

Design a curriculum

Deliver care

You might have seen the headlines:

“SaaS is dead.”

“Management consultants replaced by ChatGPT.”

“10x developers are now 1x prompts.”

My take? Not anytime soon — but it’s also not completely out to lunch. AI won’t replace you. A person using AI will.

There are real risks and constraints we still need to sort out:

People & change — shifting how teams work

Jobs & re-skilling — especially for knowledge workers

Privacy & security — protecting data when AI is in the loop

Reliability & trust — GPTs are confident, even when wrong. How’s that going to go with your customer?

And yet, the opportunity is massive:

To do what 80-person teams do today — with much less

To go faster, test more, and waste less

To finally fix the things that make delivery fail — knowledge trapped in heads, broken handoffs, unclear ownership, endless rework

Instead of buying SaaS, you define a goal, pick a GPT, and wire in a rich set of tools and memory. Instead of 80-person delivery teams, maybe it's 5 people + 10 AI Pods: collaborating, not replacing.

But this isn’t hype. It’s responsibility. We need to design systems that prioritize transparency, security, and human guidance, with audit logs, scoped permissions, and ethical guardrails baked in.

VII. Let’s Build It Together

If you’re curious, skeptical, or ready to start — I’d love to connect. The future of delivery isn’t built alone.

Try the framework. Use the Pods to build your own apps. Explore the Youth Career Coach with your kids. Or reach out if you’re curious or ready to reimagine how your organization delivers.

Future posts will explore:

How we continue evolving the AI Delivery Framework

What it means to reimagine business applications and user experiences

Human-led, AI-enabled design in action

You can reach me at stewart.mckendry@gmail.com or drop a comment if you’d like to connect or experiment with the tools.

We’re building the Thunder Train for the AI era. Let’s ride.